While developing a Node.js application, I needed inter-process communication. In such cases I usually use Redis Pub/Sub, which has the bonus of scaling across several servers. This time, however, the question was specifically about local message exchange and its performance.

I decided to investigate the existing options for exchanging messages. This is a standard task, known as IPC (inter-process communication). How can you implement IPC in Node.js, and how well will the solution perform? Let’s find out.

Flavors of IPC

The first option (and the baseline for the measurements) is Redis Pub/Sub. In my experience, there is a noticeable performance difference between Redis’s TCP and unix socket modes, and the unix socket mode is faster, so that is the one I chose. On the charts below, Redis over unix sockets is labeled simply redis.
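For reference, here is roughly what the Redis baseline sends over the unix socket. The sketch below speaks the Redis protocol (RESP) directly through Node’s net module instead of a client library. The socket path /tmp/redis.sock and the helper names encodeCommand and publish are assumptions, and the path has to match the unixsocket setting in redis.conf. A real benchmark would keep one connection open rather than connecting per message.

```javascript
const net = require('net');

// Encode a Redis command as a RESP array of bulk strings, e.g.
// ['PUBLISH', 'chan', 'hi'] -> "*3\r\n$7\r\nPUBLISH\r\n$4\r\nchan\r\n$2\r\nhi\r\n".
function encodeCommand(args) {
  let out = '*' + args.length + '\r\n';
  for (const arg of args) {
    const s = String(arg);
    // Bulk string lengths are counted in bytes, not characters.
    out += '$' + Buffer.byteLength(s, 'utf8') + '\r\n' + s + '\r\n';
  }
  return out;
}

// Hypothetical helper: publish one message over a unix socket connection.
// '/tmp/redis.sock' is an assumed path; it must match `unixsocket` in redis.conf.
function publish(channel, message, socketPath = '/tmp/redis.sock') {
  return new Promise((resolve, reject) => {
    const conn = net.createConnection(socketPath, () => {
      conn.write(encodeCommand(['PUBLISH', channel, message]));
    });
    conn.once('data', (reply) => {
      // PUBLISH returns an integer reply such as ":1\r\n"
      // (the number of subscribers that received the message).
      conn.end();
      resolve(reply.toString());
    });
    conn.once('error', reject);
  });
}
```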

The next option is Node’s standard API: ChildProcess.send and process.on('message'). This mechanism uses pipes between the master and child processes. On the charts below, this option is labeled native (pipe).
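A minimal sketch of the native (pipe) round trip, assuming a current Node.js release. To keep it in one file, the worker source is passed to node -e, and the channel is created with spawn() and an 'ipc' stdio entry, which gives both sides send() and a 'message' event much as fork() does. The names runNativeWorker, ROUNDS and PAYLOAD are hypothetical. As in the benchmark, the worker sends a request and waits for the master’s reply before sending the next one.

```javascript
const { spawn } = require('child_process');

// Worker: sends ROUNDS requests to the master, one at a time, waiting for
// each reply before sending the next; then closes the IPC channel.
const WORKER_SOURCE = `
  const rounds = Number(process.env.ROUNDS);
  const payload = 'x'.repeat(Number(process.env.PAYLOAD));
  let sent = 0;
  function next() {
    sent += 1;
    process.send({ id: sent, payload: payload });
  }
  process.on('message', function () {
    if (sent < rounds) next();
    else process.disconnect(); // no more traffic, so the worker can exit
  });
  next();
`;

// Master: answers every request by echoing it back, and resolves with the
// number of requests answered once the worker has exited (rounds >= 1).
function runNativeWorker(rounds, payloadSize) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', WORKER_SOURCE], {
      // The 'ipc' entry opens the pipe used by send() and 'message'.
      stdio: ['ignore', 'inherit', 'inherit', 'ipc'],
      env: Object.assign({}, process.env, {
        ROUNDS: String(rounds),
        PAYLOAD: String(payloadSize),
      }),
    });
    let answered = 0;
    child.on('message', (msg) => {
      answered += 1;
      child.send({ id: msg.id, payload: msg.payload });
    });
    child.on('error', reject);
    child.on('exit', () => resolve(answered));
  });
}
```

For example, runNativeWorker(1000, 100) performs 1,000 round trips with a 100-byte payload and resolves with 1000.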

The last two options are socket-based: one uses TCP sockets and the other unix sockets. On the charts they are labeled tcp and unix socket respectively.
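Because TCP and unix sockets are byte streams without message boundaries, the socket variants need some framing on top of JSON. One simple choice, assumed here rather than taken from the original test code, is newline-delimited JSON. With Node’s net module the same server and client code serves both variants; only the address passed to listen() and connect() differs ({ path } for a unix socket, { host, port } for tcp). The function names are hypothetical, and the unix socket path assumes a POSIX system.

```javascript
const net = require('net');
const os = require('os');
const path = require('path');

// Byte streams have no message boundaries, so each JSON message is sent as one
// line. JSON.stringify escapes newlines inside strings, so '\n' is a safe delimiter.
function onJsonLines(socket, handler) {
  let buffered = '';
  socket.setEncoding('utf8');
  socket.on('data', (chunk) => {
    buffered += chunk;
    let nl;
    while ((nl = buffered.indexOf('\n')) !== -1) {
      const line = buffered.slice(0, nl);
      buffered = buffered.slice(nl + 1);
      handler(JSON.parse(line));
    }
  });
}

// Master: reply to every request by echoing it back.
// `where` is { path } for a unix socket or { host, port } for tcp (port 0 = any free port).
function startMaster(where) {
  return new Promise((resolve, reject) => {
    const server = net.createServer((socket) => {
      onJsonLines(socket, (msg) => socket.write(JSON.stringify(msg) + '\n'));
    });
    server.once('error', reject);
    server.listen(where, () => resolve(server));
  });
}

// Worker: send `rounds` requests over one connection, one at a time.
function runSocketWorker(where, rounds, payload) {
  return new Promise((resolve, reject) => {
    let sent = 0;
    const socket = net.connect(where, send);
    function send() {
      sent += 1;
      socket.write(JSON.stringify({ id: sent, payload: payload }) + '\n');
    }
    onJsonLines(socket, () => {
      if (sent < rounds) {
        send();
      } else {
        socket.end();
        resolve(sent);
      }
    });
    socket.on('error', reject);
  });
}

// Hypothetical helper: a per-process socket path in the temp directory (POSIX only).
function defaultSocketPath() {
  return path.join(os.tmpdir(), 'ipc-bench-' + process.pid + '.sock');
}
```

For the unix socket variant, pass { path: defaultSocketPath() } to both startMaster and runSocketWorker; for tcp, start the master on { host: '127.0.0.1', port: 0 } and give the worker the port reported by server.address().port.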

Benchmark method and conditions

My use case involved sending a message to the master process and receiving a reply, so I measure performance in request/reply pairs (round trips). Each test script creates one master process and W child processes (workers). In all tests, the total number of processes is kept below the number of available cores on the test machine.

Each worker runs C concurrent asynchronous loops; each loop sends a message, waits for the reply, and only then sends the next one. Every message also carries an extra string field, payload, of P bytes.
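The measurement loop described above can be sketched independently of the transport. Here request is a hypothetical function that sends one message and resolves with its reply (for example, a wrapper around any of the transports described above). Each of the C streams awaits its reply before sending again, so at most C round trips per worker are in flight. fitsOnMachine, also hypothetical, mirrors the rule that the master plus W workers stay below the core count. Timing with process.hrtime.bigint() assumes a current Node.js release.

```javascript
const os = require('os');

// Rule from the benchmark setup: 1 master + W workers must stay below the core count.
function fitsOnMachine(W) {
  return W + 1 < os.cpus().length;
}

// One stream: send a message, wait for its reply, then send the next one.
async function runStream(request, perStream, payload) {
  for (let i = 0; i < perStream; i++) {
    await request({ seq: i, payload: payload });
  }
}

// One worker: C streams run concurrently, each with at most one message in flight.
// `request` is a hypothetical transport function: msg => Promise<reply>.
async function runWorker(request, C, perStream, P) {
  const payload = 'x'.repeat(P);
  const start = process.hrtime.bigint();
  const streams = [];
  for (let c = 0; c < C; c++) {
    streams.push(runStream(request, perStream, payload));
  }
  await Promise.all(streams);
  const seconds = Number(process.hrtime.bigint() - start) / 1e9;
  const roundTrips = C * perStream;
  return { roundTrips: roundTrips, perSecond: roundTrips / seconds };
}
```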

The native ChildProcess.send serializes messages as JSON, so the unix socket and tcp tests use JSON as well, which keeps the comparison fair. I also added a second unix socket test that serializes with string concatenation and deserializes with string split; on the charts it appears as unix socket opt.
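A sketch of the two serialization styles being compared, assuming messages shaped like { id, payload }. The JSON codec corresponds to the native, unix socket and tcp tests. The concatenation codec follows the idea behind unix socket opt, but its separator ('\x1f', the ASCII unit separator) and fixed field order are assumptions, and it breaks if the separator ever appears in the data.

```javascript
// JSON codec: the format used by the native, unix socket and tcp tests.
const jsonCodec = {
  encode: (msg) => JSON.stringify(msg),
  decode: (str) => JSON.parse(str),
};

// Concatenation codec in the spirit of "unix socket opt".
// Assumes a fixed field order and that SEP never occurs in the data.
const SEP = '\x1f';
const concatCodec = {
  encode: (msg) => msg.id + SEP + msg.payload,
  decode: (str) => {
    const parts = str.split(SEP);
    return { id: Number(parts[0]), payload: parts[1] };
  },
};
```

The concatenation codec skips JSON’s quoting and escaping, at the cost of a hard-coded schema.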

The tests were run on Node.js v4.4.5 and Redis 3.0.

Results

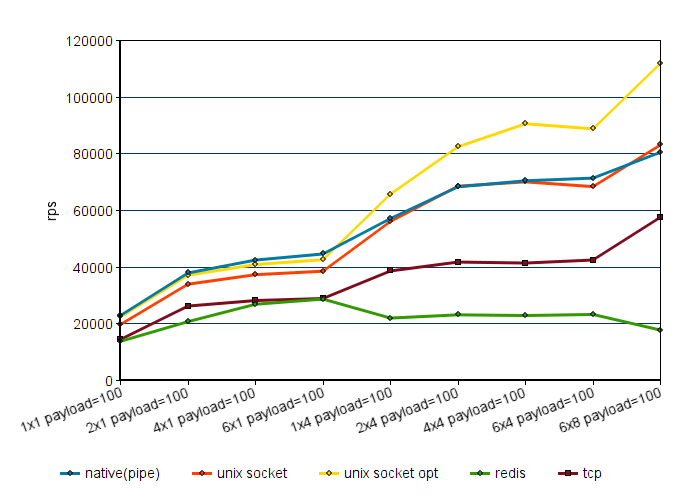

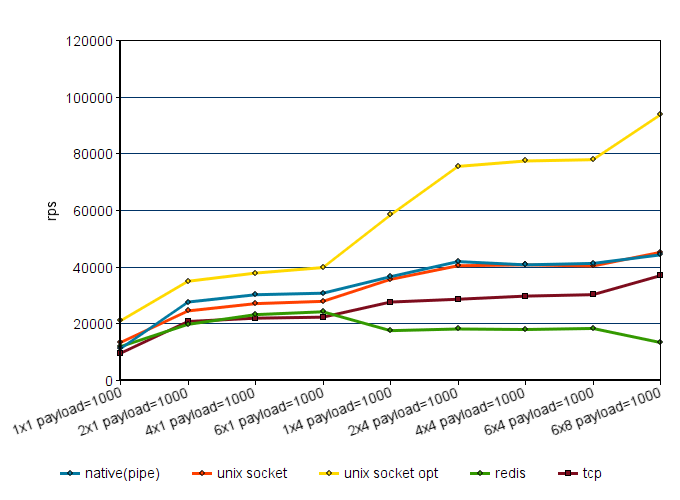

The X axis shows the workload in the format WxC payload = P. For example, 2x4 payload = 100 means 2 workers, each running 4 concurrent streams, with a 100-byte payload in every message.
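The label format can be read mechanically. This hypothetical parser also makes one consequence explicit: since every stream has at most one message in flight, a WxC workload keeps up to W × C round trips in flight at once (8 for 2x4).

```javascript
// Parse a chart label such as "2x4 payload = 100".
// W = workers, C = concurrent streams per worker, P = payload size in bytes.
function parseWorkload(label) {
  const m = /^(\d+)x(\d+) payload = (\d+)$/.exec(label.trim());
  if (!m) throw new Error('unrecognized workload label: ' + label);
  const workers = Number(m[1]);
  const streamsPerWorker = Number(m[2]);
  return {
    workers: workers,
    streamsPerWorker: streamsPerWorker,
    payloadBytes: Number(m[3]),
    // Each stream waits for its reply, so this is the maximum in flight overall.
    concurrentRoundTrips: workers * streamsPerWorker,
  };
}
```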

Conclusions

As you can see, there is practically no performance difference between the native pipe and unix sockets, whereas tcp is 20-40% slower.

With a single concurrent stream, Redis performs on par with tcp, but its performance does not improve as concurrency grows. Perhaps the bottleneck is the incoming channel, which all processes share. During the tests Redis consumed up to 50% of a CPU core, so I would not expect significant improvements even from some optimization attempts.

I also found that the bigger the messages, the more it pays to replace JSON with a format that is faster to serialize. For relatively small messages and payloads, the difference in performance is small.

By the way, my attempt to use Buffer for serialization did not improve performance; in some cases it was even worse than plain strings. Apparently, Buffer brings real benefits mainly when the data is genuinely binary.
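To see why Buffer need not help with text payloads, compare a hypothetical binary codec (a 4-byte id followed by the payload as UTF-8 bytes) with a plain-string codec. The payload still has to be converted from a JS string to bytes and back, so plausibly little work is saved. timeCodec is a rough micro-benchmark for trying this locally at different payload sizes. The names and the message layout are assumptions, not the original test code, and the sketch assumes a current Node.js release.

```javascript
// Hypothetical Buffer codec: 4-byte big-endian id, then UTF-8 payload bytes.
function encodeBuffer(msg) {
  const body = Buffer.from(msg.payload, 'utf8');
  const buf = Buffer.allocUnsafe(4 + body.length);
  buf.writeUInt32BE(msg.id, 0);
  body.copy(buf, 4);
  return buf;
}

function decodeBuffer(buf) {
  return { id: buf.readUInt32BE(0), payload: buf.toString('utf8', 4) };
}

// Plain-string counterpart: "<id>:<payload>", split at the first ':'.
function encodeString(msg) {
  return msg.id + ':' + msg.payload;
}

function decodeString(str) {
  const i = str.indexOf(':');
  return { id: Number(str.slice(0, i)), payload: str.slice(i + 1) };
}

// Time `iterations` encode/decode round trips; returns elapsed milliseconds.
function timeCodec(encode, decode, msg, iterations) {
  let sink = 0; // consume results so the work cannot be skipped
  const start = process.hrtime.bigint();
  for (let k = 0; k < iterations; k++) {
    sink += decode(encode(msg)).id;
  }
  const ms = Number(process.hrtime.bigint() - start) / 1e6;
  return sink >= 0 ? ms : -1;
}
```

Running timeCodec with both codecs for payloads of, say, 100, 10,000 and 100,000 characters gives a rough local comparison; the numbers will vary with the Node.js version and the machine.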

To sum up, if you do not need to squeeze maximum performance out of your machine, the native ChildProcess.send is perfectly sufficient.

A possible drawback is that the master process takes most of the load and can consume up to 90% of a CPU core. Sockets mean a more complex implementation, but they can offer better performance and let you move that load to a separate process. They are also accessible from other programs if needed, and with tcp sockets you can even communicate beyond a single machine. I assume that roughly the same performance can be achieved with ZeroMQ and similar solutions.

Source code of the tests is available here.